(This project is part of the Duke EGR101 class in Fall 2021. Contributed by Chengyang Zhou, Eric Song, Arsh Banipal, Bill Zheng, and Darren Wu. Supervised by Dr. Nan Jokerst and Wizeview, Inc.)

Falls are currently a major health issue for older adults. In 2015, 50 billion dollars in medical bills were attributed to falls among the elderly (CDC, 2015).

The risk of falling can be readily lowered through the implementation of fall risk assessments (FRAs). However, current manual assessments of fall risks exhaust valuable time and resources. While there have been attempts to streamline FRAs onto unsupervised platforms that do not require oversight by a healthcare professional, existing solutions often require specialized, extraneous equipment.

Wizeview, a US-based healthcare startup, aims to combat this issue. The objective of this project is to help Wizeview develop an unsupervised Fall Risk Assessment system that is accessible, low-cost, portable, efficient, and robust, enabling caregivers to provide accurate FRA scoring for adults aged 65 years and above.

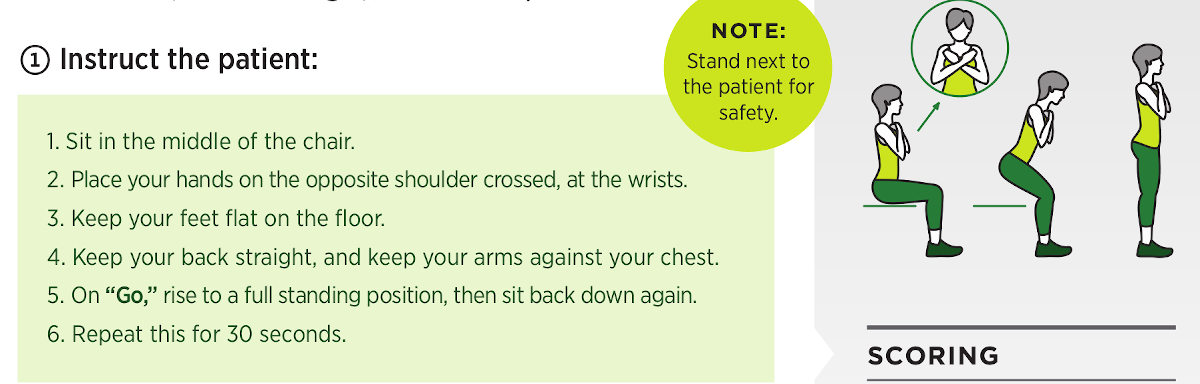

The Centers for Disease Control and Prevention (CDC) recommends three standard fall risk assessments. The target assessment of this project is the 30-Second Chair Stand Test.

Here is how to conduct this test manually:

Design Criteria

Here are some criteria which must be met in order for this project to be a success.

- 1. Accuracy: the automated count should be within ±10% of the count obtained by manual counting.

- 2. Robustness: the software should perform under different lighting conditions and backgrounds.

- 3. Accessibility: the target audience (elderly adults) should be able to operate the system without assistance.

- 4. Speed: real-time counting is preferred.
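The accuracy criterion can be written as a one-line check. The sketch below is only an illustration: the function name `within_tolerance` and its parameters are hypothetical and do not come from the project code.

```python
def within_tolerance(automated_count: int, manual_count: int,
                     tolerance: float = 0.10) -> bool:
    """Return True if the automated count is within ±tolerance of the manual count.

    `tolerance` is a fraction of the manual count (0.10 means ±10%).
    """
    if manual_count == 0:
        # Avoid a meaningless percentage when nothing was counted manually.
        return automated_count == 0
    return abs(automated_count - manual_count) <= tolerance * manual_count


# Example: a manual count of 12 stands allows an error of at most 1.2 stands.
print(within_tolerance(11, 12))  # one stand off
print(within_tolerance(10, 12))  # two stands off
```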

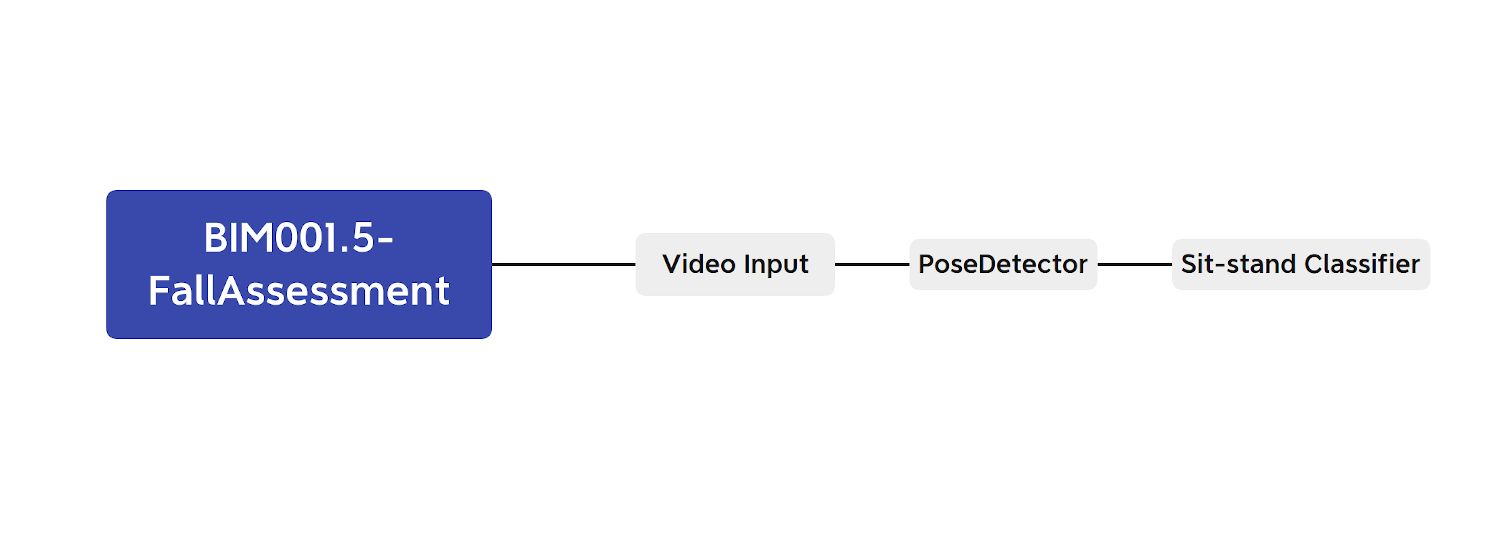

Overall Design

Fancy version of the approach: Pose-detection-based Sit-stand Classification using Computer Vision

TL;DR: Count sits and stands using AI

Pose Detection

We used MediaPipe, a machine learning platform by Google. It includes a pose detection algorithm that reduces a person to a skeleton made of a set of key points.

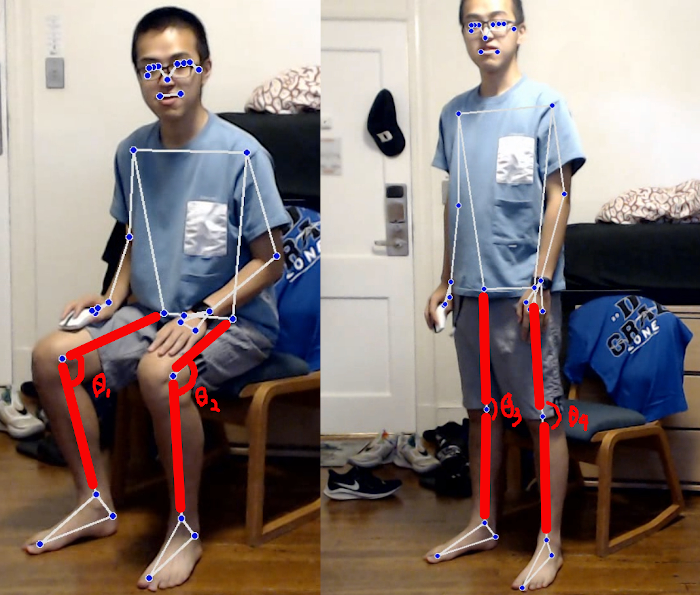
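For readers who want to try this step, here is a minimal sketch of how a single image might be turned into a key-point vector. It assumes the legacy `mp.solutions.pose` interface of MediaPipe plus OpenCV for color conversion; the helper names `landmarks_to_vector` and `detect_pose` are hypothetical, and the project's actual code may be organized differently.

```python
from typing import List, Optional, Sequence

# Indices of the leg key points in MediaPipe Pose's 33-landmark model.
LEG_LANDMARKS = {
    "left_hip": 23, "right_hip": 24,
    "left_knee": 25, "right_knee": 26,
    "left_ankle": 27, "right_ankle": 28,
}


def landmarks_to_vector(landmarks: Sequence) -> List[float]:
    """Flatten landmark objects (each with .x and .y) into [x0, y0, x1, y1, ...]."""
    vector: List[float] = []
    for lm in landmarks:
        vector.extend([lm.x, lm.y])
    return vector


def detect_pose(image_bgr) -> Optional[List[float]]:
    """Run MediaPipe Pose on one BGR image; return a flat key-point vector or None."""
    # Imported lazily so the helper above works without these packages installed.
    import cv2
    import mediapipe as mp

    with mp.solutions.pose.Pose(static_image_mode=True) as pose:
        # MediaPipe expects RGB input, while OpenCV loads images as BGR.
        results = pose.process(cv2.cvtColor(image_bgr, cv2.COLOR_BGR2RGB))
    if results.pose_landmarks is None:
        return None  # no person was found in the image
    return landmarks_to_vector(results.pose_landmarks.landmark)
```

A call such as `detect_pose(cv2.imread("photo.jpg"))` (the file name is a placeholder) would then give one feature vector per image, with coordinates normalized to the image size.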

When we apply this algorithm to a sitting person and a standing person, we observe a conspicuous difference in the generated “stick figures”, especially in the legs (highlighted in red).

In technical terms, this difference in leg orientation and knee angle places the feature vectors of the two poses far apart in the high-dimensional feature space, which makes classification possible.

Note: since our classification relies heavily on the knee angles, patients should be asked to sit at an angle (30-60 degrees) from the camera.
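To make the role of the knee angle concrete, the sketch below computes the angle at the knee from hip, knee, and ankle coordinates. This is an illustration only: the write-up does not say whether the angle is computed explicitly or left implicit in the key-point coordinates, and the name `joint_angle` is hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Return the angle at point b, in degrees, between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("points must be distinct to define an angle")
    cosine = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to [-1, 1] to guard against floating-point rounding.
    return math.degrees(math.acos(max(-1.0, min(1.0, cosine))))


# Image coordinates: y grows downward.
standing = joint_angle((0, 0), (0, 1), (0, 2))  # hip, knee, ankle in a straight line
sitting = joint_angle((0, 0), (1, 0), (1, 1))   # thigh horizontal, shin vertical
print(standing, sitting)
```

A straight leg gives an angle near 180 degrees and a seated leg viewed from the side gives roughly 90 degrees. Seen head-on, both poses project to nearly straight lines, which is why the oblique camera angle matters.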

Sit-stand Classification

We chose to use an extremely simple K-nearest-neighbour (KNN) classifier for the following reasons:

- Easy to train: since every patient has a different body size and is tested under different lighting conditions, we need to train a new classifier on the spot. This requires the training/calibration process to be as quick as possible.

- Quick inference: every frame of the video input is passed into this classifier, so we should minimize the time the model takes to output a prediction.

- Simple task: as illustrated, the feature vectors are easily distinguishable, so a 20-million-parameter Inception-ResNext would be overkill.
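The reasons above can be seen in a minimal pure-Python KNN sketch: "training" only stores the calibration samples, and a prediction is a vote among the closest ones. The class name `KNNClassifier`, the value `k=3`, and the use of Euclidean distance are assumptions for illustration; the project may well use a library implementation with different settings.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence, Tuple


class KNNClassifier:
    """Tiny K-nearest-neighbour classifier over fixed-length feature vectors."""

    def __init__(self, k: int = 3) -> None:
        self.k = k
        self.samples: List[Tuple[Sequence[float], str]] = []

    def fit(self, vectors: Sequence[Sequence[float]], labels: Sequence[str]) -> None:
        # Training is just memorizing the labeled calibration samples.
        self.samples = list(zip(vectors, labels))

    def predict_proba(self, vector: Sequence[float]) -> Dict[str, float]:
        """Return the fraction of the k nearest samples that carry each label."""
        if not self.samples:
            raise ValueError("fit() must be called with at least one sample")
        nearest = sorted(self.samples, key=lambda s: math.dist(s[0], vector))[: self.k]
        votes = Counter(label for _, label in nearest)
        return {label: count / len(nearest) for label, count in votes.items()}

    def predict(self, vector: Sequence[float]) -> str:
        proba = self.predict_proba(vector)
        return max(proba, key=proba.get)


# Toy calibration data: one feature per sample (a knee angle in degrees).
clf = KNNClassifier(k=3)
clf.fit([[170.0], [175.0], [180.0], [85.0], [90.0], [95.0]],
        ["stand"] * 3 + ["sit"] * 3)
print(clf.predict([172.0]), clf.predict([88.0]))
```

With only a handful of calibration samples per patient, sorting all samples by distance for each frame is cheap, so no index structure is needed.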

Final Product

We used the Python tkinter library to build a simple window-based interface.

Welcome to Fall Assessment V1.13!

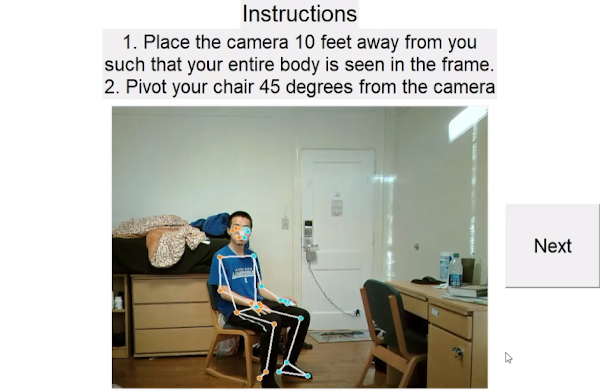

Clicking START brings us to the Instructions page, which shows the elderly user how to get ready for calibration.

Clicking Next brings us to the calibration page. Using the respective buttons, we take 10 pictures of the patient fully standing and 10 of the patient fully seated. Clicking Next again starts the classifier training (which takes <5 s) and then proceeds to the pretest page.

When the patient is ready, click Start! The video input is fed into our pipeline of algorithms: each frame is first skeletonized using MediaPipe, then classified using the trained KNN classifier. We threshold the confidence score to detect every stand and sit.
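One way the thresholding step could work is sketched below. It counts a stand each time the per-frame "standing" confidence rises above a high threshold, and waits for it to fall below a low threshold before another stand can be counted. The two-threshold scheme, the values 0.8 and 0.2, and the name `count_stands` are all assumptions; the write-up only states that the confidence score is thresholded.

```python
from typing import Iterable


def count_stands(stand_confidences: Iterable[float],
                 high: float = 0.8, low: float = 0.2) -> int:
    """Count sit-to-stand transitions in a sequence of per-frame confidences.

    Each value is the classifier's confidence that the patient is standing.
    """
    count = 0
    standing = False  # the test starts with the patient seated
    for confidence in stand_confidences:
        if not standing and confidence >= high:
            standing = True
            count += 1  # a new stand has been completed
        elif standing and confidence <= low:
            standing = False  # the patient is seated again
    return count


frames = [0.0, 0.1, 0.5, 0.9, 1.0, 0.6, 0.1, 0.0, 0.7, 1.0, 0.9, 0.3, 0.0]
print(count_stands(frames))
```

Using two thresholds instead of one keeps an uncertain frame in the middle of a movement (for example a confidence of 0.5) from being counted as an extra stand.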

Yay! Now we have an accurate and automated fall assessment system!